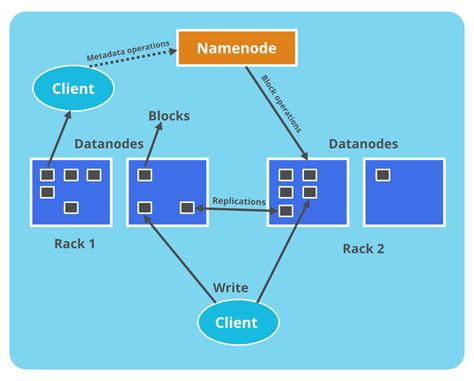

dfs.replication hadoop

HDFS is the primary distributed storage used by Hadoop applications. An HDFS cluster primarily consists of a NameNode that manages the file system metadata and DataNodes that store the actual data.



Here, we have set the replication factor to 1 because we have only a single system to run Hadoop on (a single laptop), not a cluster with many nodes. You simply need to change the value of `dfs.replication` in hdfs-site.xml. The `-replicate` option of `hdfs fsck` initiates replication work to make mis-replicated blocks satisfy the block placement policy. Setting `dfs.replication` to 1 on clusters with fewer than four nodes can lead to HDFS data loss if a single node goes down. If your cluster has HDFS storage, we recommend that you configure …
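For a single-machine setup like this one, the change amounts to setting `dfs.replication` to 1 in hdfs-site.xml. The sketch below writes such a minimal file. The function name and the `./conf` fallback directory are assumptions for illustration; on a real installation you would edit the existing hdfs-site.xml under `HADOOP_CONF_DIR` rather than overwrite it.

```shell
# Hypothetical helper: write a minimal hdfs-site.xml that sets the default
# replication factor to 1 for a single-node (pseudo-distributed) setup.
# Assumption: HADOOP_CONF_DIR is the config directory; ./conf is only a placeholder.
write_single_node_hdfs_site() {
  local conf_dir="${HADOOP_CONF_DIR:-./conf}"
  mkdir -p "$conf_dir"
  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <!-- One machine means only one copy of each block can exist. -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}
```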

Changing the `dfs.replication` property in hdfs-site.xml changes the default replication for all files subsequently placed in HDFS. You can also change the replication factor on a per-file basis using the Hadoop FS shell. A related setting, `dfs.namenode.safemode.threshold-pct`, specifies the percentage of blocks that should satisfy the minimal replication requirement defined by `dfs.replication.min`. Values less than or equal to 0 mean not to wait for any particular percentage of blocks before leaving safemode.
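To make the threshold concrete, here is a toy calculation (not HDFS code) of whether the share of blocks meeting the minimal replication has reached `dfs.namenode.safemode.threshold-pct`. It assumes the hdfs-default.xml default of 0.999 and ignores the other conditions the NameNode also checks before leaving safemode; the function name is hypothetical.

```shell
# Toy check: has the fraction of "safe" blocks (those meeting the minimal
# replication) reached the safemode threshold?
# Usage: threshold_reached <safe_blocks> <total_blocks> [threshold_pct]
threshold_reached() {
  local safe="$1" total="$2" pct="${3:-0.999}"
  awk -v s="$safe" -v t="$total" -v p="$pct" 'BEGIN {
    s += 0; t += 0; p += 0          # force numeric comparison
    ratio = (t == 0) ? 1 : s / t    # no blocks means nothing to wait for
    # p <= 0 is always reached (ratio >= 0); p > 1 can never be reached.
    if (ratio >= p) print "yes"; else print "no"
  }'
}
```

With 1000 blocks, 999 safe blocks is just enough at the default threshold, while 998 is not.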

The replication factor for data stored in HDFS can be modified with `hadoop fs -setrep -R 5 /`, which changes the replication factor of everything under `/` to 5. To change the replication factor of a single file, use `hdfs dfs -setrep -w 3 /user/hdfs/file.txt`. You can also change the replication factor of a directory by passing the directory path to `-setrep`; the change is then applied recursively to every file under it.
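The `-setrep` commands above can be wrapped in a small helper that validates the replication factor before calling the shell. This is a hypothetical sketch: the function name `set_replication` and the `DRY_RUN` switch are illustrative, not part of Hadoop; only the `hdfs dfs -setrep [-w] <numReplicas> <path>` command it builds comes from the Hadoop shell.

```shell
# Hypothetical wrapper around `hdfs dfs -setrep`.
# Usage: set_replication <numReplicas> <path> [wait]
# With DRY_RUN=1 it prints the command instead of running it.
set_replication() {
  local reps="$1" path="$2" wait="${3:-}"
  # A replication factor must be a positive integer.
  if ! [[ "$reps" =~ ^[0-9]+$ ]] || (( 10#$reps < 1 )); then
    echo "invalid replication factor: $reps" >&2
    return 1
  fi
  local cmd=(hdfs dfs -setrep)
  # -w waits until every file under $path reaches the new factor (can be slow).
  [ "$wait" = "wait" ] && cmd+=(-w)
  cmd+=("$reps" "$path")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `set_replication 3 /user/hdfs/file.txt wait`. The helper leaves out `-R` because, according to the Hadoop FileSystem shell documentation, `-setrep` on a directory already applies recursively and `-R` is accepted only for backwards compatibility.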


Hadoop includes various shell-like commands that directly interact with HDFS and the other file systems that Hadoop supports. The command `bin/hdfs dfs -help` lists the commands supported by the Hadoop shell, and `bin/hdfs dfs -help command-name` displays more detailed help for a command. These commands support most of the normal file system operations.

Before learning about HDFS (Hadoop Distributed File System), we should know what a file system actually is. DFS stands for distributed file system: a way of storing files across multiple nodes in a distributed manner.

A common question about the `dfs.replication` parameter:
1. The default block replication is 3. If I configure `dfs.replication=1`, does it affect cluster performance?
2. I have a lot of data written with `dfs.replication=1`, and now I change the configuration to `dfs.replication=3`. Will my data be re-replicated automatically, or do I have to rebuild it?

Changing `dfs.replication` does not re-replicate data that already exists; it only sets the default for new files. Running `-setrep` on a directory, on the other hand, affects only the files that already exist under it: new files created in that directory later still get the cluster's default replication factor (`dfs.replication` from hdfs-site.xml).

Hadoop has an option parsing framework that employs parsing generic options as well as running classes. `hdfs dfsadmin -report` reports basic file system information and statistics; its DFS usage can differ from `du` usage because it measures the raw space used by replication, checksums, snapshots, etc. on all the DataNodes.

A related question: when uploading a file to HDFS with the replication factor set to 1 (for example `hadoop fs -D dfs.replication=1 -copyFromLocal file.txt /user/ablimit`), will the file's splits all reside on one machine, or will they be distributed across multiple machines in the network?

Before running `hdfs dfs` or `hadoop fs` commands, you first need to start the Hadoop services by running the `start-dfs.sh` script from the Hadoop installation.

`hadoop fs -du /hdfs-file-path` (or `hdfs dfs -du /hdfs-file-path`) shows the size of a file or directory; the first field of its output is the base size of the file or directory before replication. `dus` shows the total size of a directory or file (it is deprecated in favor of `du -s`).

`dfs.namenode.replication.min` is the setting for the minimal block replication (source: the Hadoop 2.9 documentation), as opposed to `dfs.replication.max` and `dfs.replication` (the maximal and the default block replication, respectively). The minimal block replication defines the minimum number of replicas that have to be written for a write to be successful.
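The gap between the base size and the raw space can be read straight off the `du` output. A minimal sketch, assuming the three-column format of recent Hadoop releases (`size`, `disk_space_consumed_with_all_replicas`, `path`) and output without `-h`; older releases print only size and path, and paths containing spaces are not handled. The function name is hypothetical.

```shell
# Sketch: report the effective replication of each entry from `hadoop fs -du`
# output read on stdin (raw bytes consumed divided by logical bytes).
du_overhead() {
  awk 'NF >= 3 && $1 > 0 {
    printf "%s %.1f\n", $3, $2 / $1
  }'
}
```

Usage: `hadoop fs -du /user | du_overhead`. A file stored with replication 3 shows up as `3.0`.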

A replication factor can't be set for a specific node in the cluster; you can set it for the entire cluster, a directory, or a file. `dfs.replication` can be updated in hdfs-site.xml on a running cluster. Set the replication factor for a file with `hadoop dfs -setrep -w <numReplicas> file-path`, or set it recursively for a directory or the entire cluster with `hadoop fs -setrep -R -w 1 /`.

The difference is that `FileSystem.setReplication()` sets the replication of an existing file on HDFS. In your case, you first copy the local file testFile.txt to HDFS using the default replication factor (3) and then change the replication factor of this file to 1. After this command, it takes a while until the over-replicated blocks are deleted.

Replication of data blocks does not occur when the NameNode is in the Safemode state. The NameNode receives Heartbeat and Blockreport messages from the DataNodes; a Blockreport contains the list of data blocks that a DataNode is hosting. The FS shell is targeted at applications that need a scripting language to interact with the stored data; for example, `bin/hadoop dfs -cat /foodir/myfile.txt` views the contents of /foodir/myfile.txt. Because the Hadoop Distributed File System was designed to hold and manage large amounts of data, typical HDFS block sizes are large (the default `dfs.blocksize` is 128 MB in Hadoop 2 and later).

It is also possible to set `dfs.replication` (here to 3) through the Hadoop configuration of a SparkContext instance, where `spark` is an existing SparkSession: `val hconf: org.apache.hadoop.conf.Configuration = spark.sparkContext.hadoopConfiguration`, then `hconf.setInt("dfs.replication", 3)`.

You can also set the replication factor for a single upload: `hadoop fs -D dfs.replication=3 -copyFromLocal file.txt /user/myFolder`. Under-replication is taken care of by Hadoop itself, and you will observe the number of under-replicated blocks changing as it works through them. In the Java API, a file can be written with a specific replication factor, for example through the `FileSystem.create(Path, short)` overload.

HDFS stores each file as a sequence of blocks, and the blocks of a file are replicated for fault tolerance. The NameNode makes all decisions regarding replication of blocks. It periodically receives a Blockreport from each of the DataNodes in the cluster; a Blockreport contains a list of all blocks on a DataNode.

To set the replication of an individual file to 4, use `hadoop dfs -setrep -w 4 /path/to/file`. To change the replication of all files under a directory to 4, recursively, use `hadoop dfs -setrep -R -w 4 /directory/path`.

HDFS has no home directory for your user by default, so create it first (for example with `bin/hdfs dfs -mkdir -p /user/<username>`). To change the replication factor to 4 for the directory /geeks stored in HDFS, run `bin/hdfs dfs -setrep -R 4 /geeks`. Adding `-w` makes the command wait until the replication is completed.

To change the replication factor of a directory, use `hdfs dfs -setrep -R -w 2 /tmp`; for a particular file, use `hdfs dfs -setrep -w 3 /tmp/logs/file.txt`. If you want the change to apply to new files that do not exist yet but will be created in the future, change `dfs.replication` instead: it only applies to new files you create and does not modify the replication factor of files that already exist. To change the replication factor of existing files, you can run the following command, which runs recursively on all files in HDFS: `hadoop dfs -setrep -R -w 1 /`.
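Since a new `dfs.replication` value leaves existing files untouched, it helps to find the files that still carry the old factor. A minimal sketch reading `hdfs dfs -ls -R` output, where the second column is the replication factor for files and `-` for directories and the last column is the path; the function name is hypothetical and paths containing spaces are not handled.

```shell
# Sketch: list files whose replication factor differs from a target value.
# Reads `hdfs dfs -ls -R` output on stdin; prints "<current_factor> <path>".
files_not_at_replication() {
  local target="$1"
  # $1 starting with "-" selects regular files (directories start with "d").
  awk -v want="$target" '$1 ~ /^-/ && $2 != "-" && $2 != want { print $2, $NF }'
}
```

Usage: `hdfs dfs -ls -R /tmp | files_not_at_replication 3`, then run `-setrep` on the paths it reports.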

The rate of replication work is throttled by HDFS so that it does not interfere with cluster traffic when failures happen during regular cluster load. The properties that control this are `dfs.namenode.replication.work.multiplier.per.iteration` (default 2), `dfs.namenode.replication.max-streams` (default 2) and `dfs.namenode.replication.max-streams-hard-limit` (default 4). Let's think this through: the default replication (`dfs.replication`) is typically 3, so why have a maximum (`dfs.replication.max`)? Maybe you do a lot of maintenance and regularly take a node out of the cluster.
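A back-of-the-envelope sketch of the throttle: the description of `dfs.namenode.replication.work.multiplier.per.iteration` in hdfs-default.xml says the number of blocks scheduled per iteration is that multiplier times the number of live DataNodes. The function below (a hypothetical name, not HDFS code) uses that rule to estimate how many iterations a backlog of under-replicated blocks needs; it ignores the per-DataNode `max-streams` limits.

```shell
# Estimate iterations needed to schedule a backlog of under-replicated blocks.
# Assumption: blocks per iteration = multiplier x live DataNodes (default multiplier 2).
# Usage: replication_iterations <under_replicated_blocks> <live_datanodes> [multiplier]
replication_iterations() {
  local backlog="$1" live="$2" mult="${3:-2}"
  local per_iter=$(( mult * live ))
  # Ceiling division: a partial final batch still needs one more iteration.
  echo $(( (backlog + per_iter - 1) / per_iter ))
}
```

With 10 live DataNodes and the default multiplier, 20 blocks are scheduled per iteration, so a backlog of 1000 blocks takes 50 iterations.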

If you specify `dfs.replication` as 0, either in the client configuration or in the command itself (`hadoop fs -Ddfs.replication=0 -put testfile.txt /`), while copying files to HDFS, the command fails, but with a confusing error message.

I've finished some tests and found the reason. First, when you create a file, its replication factor must be greater than or equal to `dfs.replication.min`. HDFS performs replication to the first `dfs.replication.min` nodes synchronously, while replication to the remaining (`dfs.replication - dfs.replication.min`) nodes is processed asynchronously.
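The constraint described above can be sketched as a simple range check. The function name is hypothetical, and it assumes the hdfs-default.xml defaults of 1 for the minimum and 512 for `dfs.replication.max`; the synchronous/asynchronous split simply follows the explanation above.

```shell
# Sketch: check a requested replication factor against the min/max settings.
# Usage: check_replication <requested> [min] [max]   (defaults assumed: 1 and 512)
check_replication() {
  local rep="$1" min="${2:-1}" max="${3:-512}"
  if (( rep < min )); then
    echo "rejected: $rep < dfs.replication.min ($min)"
    return 1
  elif (( rep > max )); then
    echo "rejected: $rep > dfs.replication.max ($max)"
    return 1
  fi
  # Replicas up to the minimum are written synchronously, the rest asynchronously.
  echo "ok: $min synchronous, $(( rep - min )) asynchronous"
}
```

A request of 0, as in the failing `-Ddfs.replication=0` upload, falls below the minimum and is rejected.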

To add a DataNode:
1. Copy core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml and hadoop-env.sh to the Hadoop directory on the new machine.
2. Add its IP address or hostname to /etc/hosts.
3. Add the IP address of the new DataNode to the slaves file (located in /etc/hadoop/; in Hadoop 3 this file is named workers).
4. If the machine has two hard disks, list both locations in the `dfs.datanode.data.dir` property in hdfs-site.xml.
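The worker-list and data-directory steps can be sketched as two small helpers. The function names, the host name and the disk paths (`/data/disk1`, `/data/disk2`) are placeholders for illustration; `dfs.datanode.data.dir` takes a comma-separated list of directories.

```shell
# Append a host to the workers (Hadoop 3) / slaves (Hadoop 2) file, only once.
add_worker() {
  local file="$1" host="$2"
  touch "$file"
  grep -qxF "$host" "$file" || echo "$host" >> "$file"
}

# Print a dfs.datanode.data.dir property with one directory per disk.
data_dir_property() {
  local joined
  joined=$(IFS=,; echo "$*")   # join all arguments with commas
  cat <<EOF
<property>
  <name>dfs.datanode.data.dir</name>
  <value>$joined</value>
</property>
EOF
}
```

For example, `data_dir_property /data/disk1 /data/disk2` prints a property whose value is `/data/disk1,/data/disk2`, ready to paste into hdfs-site.xml on the new DataNode.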


